New legislation in Australia makes it illegal for those under 16 to have a social media account. To avoid financial penalties, social media companies have scrambled to remove accounts they believe breach the legislation. Notably, there are no consequences for the under-16s who attempt to create an account using a fraudulent age. As the first country to introduce such a ban, Australia has become a bit of a test case: the world is watching to see how effective the legislation is and whether it produces the desired results.

As 2026 has just begun, this also sparks a broader question for internet users and regulators alike: will this be the year the world rethinks identity online?

The status quo doesn’t work – what will?

While Australia's new age rules are rooted in concerns over the well-documented risks children face on social media platforms, the need to change the experience of social media is probably not best served by banning it entirely. The underlying issues remain. Once someone turns 16, is it suddenly acceptable to subject them to the issues they have been shielded from? Surely all people should be protected from harmful content, abuse and other negative experiences. History also suggests that banning something can increase demand for it. I remember from my own youth when radio stations banned the likes of “Relax” by Frankie Goes to Hollywood – the ban just made everyone listen to it more and helped keep the track at number one in the charts for longer. Denial fuels demand, and in this instance it could exacerbate the issue of online dangers as those under 16 in Australia look for alternatives.

Meanwhile, age-verification legislation in various other countries and in some U.S. states is also attempting to limit access to adult content, prompting websites that need to restrict their content to adopt a range of age-verification technologies. Some technologies offer real-time age determination based on facial features, while others rely on more formal methods such as government-issued identification or financial documents. All of these approaches can create additional privacy concerns, especially around data collection and storage.

Add into this mix phishing emails, romance scams, financial fraud and the many other ways that cybercriminals and fraudsters attempt to dupe their victims, and it raises the question: is the way that internet services and apps work today still fit for purpose?

Imagine being told 30 years ago that one day a small device in your pocket would allow you to connect to virtually anyone from anywhere, interact, shop, make reservations and watch TV on demand. Now imagine also being told that, alongside these cool features, the device would give people the ability to bully others and be as abusive as they want without accountability, even remaining anonymous while doing so – and that you could just as easily be on the receiving end of such behavior. Framed this way, you might have thought twice about owning one of these devices.

The abuser next door

There may be an assumption that abusive or unwanted behavior on the internet comes from somewhere else: it’s not your neighbor, not someone you know, not even someone from your town – it’s probably Russian bots or someone from afar. However, a recent BBC investigation found that in just one weekend there were 2,000 extremely abusive social media posts directed at managers and players in the Premier League and Women’s Super League, some so extreme that they involved threats of death and rape. Identifying the individuals behind such posts is unlikely, as no formal identification is needed to create a social media account and the use of a VPN makes tracking difficult.

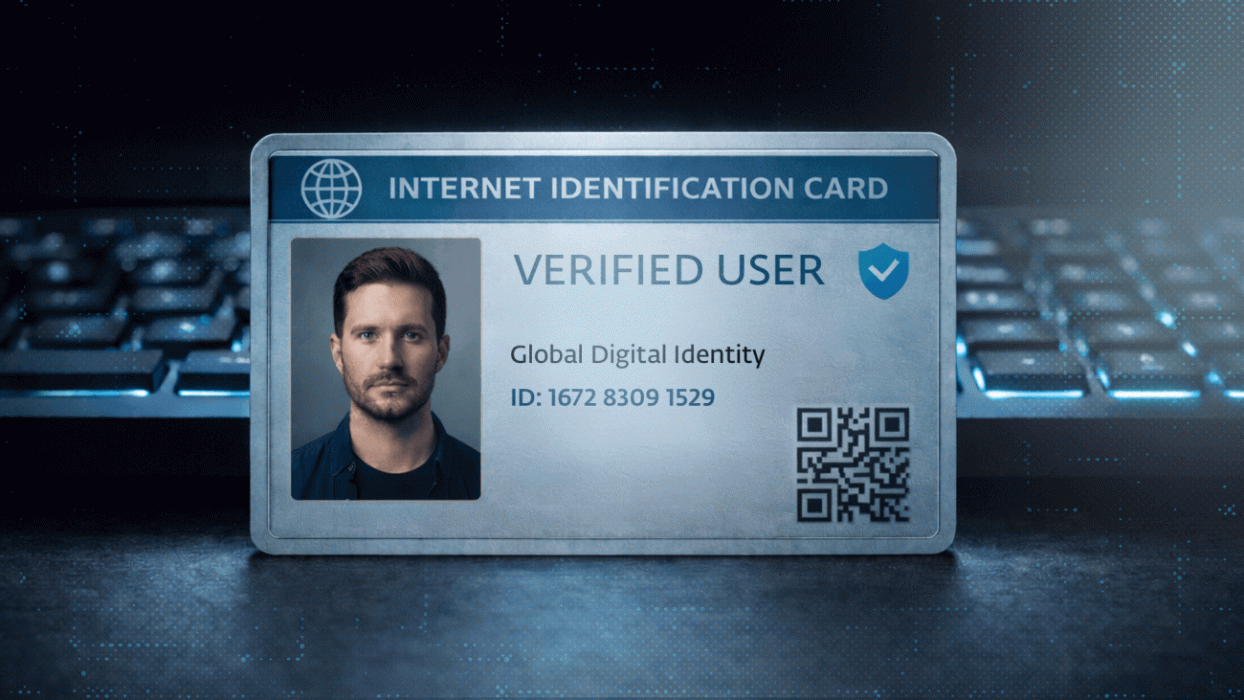

Unless an app or service is operated by a regulated company that requires identity verification, people are free to create accounts on many services using any identity they desire. This option for anonymity has been a core concept of freedom on the internet, although whether this was by design is questionable. The barrier to requiring positive identification at account creation is that services could ultimately have fewer users: it would introduce friction at sign-up, something that companies relying on user numbers to deliver ads and sponsored content want to avoid.

This leads to a broader question: Is it time for a general acceptance that the internet needs to have verified, authenticated users?

I am not suggesting that all services need to have verified users. However, if I could switch off content, posts and communication attempts from non-verified users, then my online experience might improve significantly. The football managers and players in the Premier League could be on social media without being subjected to the torrent of trash and abuse they see today, and a verified user who makes an extreme threat could be identified and face the legal consequences. Extending this concept so that under-16s could only interact with content from verified users may not completely solve the issues of today but, in the spirit of the 80/20 rule, it would likely remove the bulk of them.

The benefits are not limited to social media. At present, my email inbox has the option to separate general mailing list email from messages that need action. Introducing a third filter for unverified senders would also help winnow out possible spear-phishing and targeted attacks. There is, of course, the risk of cybercriminals hijacking verified accounts, so this is not a silver bullet. It does, however, add another layer of protection.
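To make the idea concrete, here is a minimal sketch of that three-way inbox split. It assumes every message arrives with two hypothetical flags, `sender_verified` and `is_mailing_list`; no existing mail provider or verification scheme is implied, and how a sender would actually be verified is left open.

```python
from dataclasses import dataclass
from enum import Enum


class Folder(Enum):
    ACTION = "action"
    MAILING_LIST = "mailing_list"
    UNVERIFIED = "unverified"


@dataclass(frozen=True)
class Message:
    sender: str
    subject: str
    sender_verified: bool   # hypothetical: set by some identity-verification scheme
    is_mailing_list: bool   # hypothetical: e.g. derived from mailing list headers


def route(message: Message) -> Folder:
    """Pick a folder for a message, separating unverified senders first."""
    if not message.sender_verified:
        return Folder.UNVERIFIED
    if message.is_mailing_list:
        return Folder.MAILING_LIST
    return Folder.ACTION


def sort_inbox(messages: list[Message]) -> dict[Folder, list[Message]]:
    """Group messages by folder, preserving arrival order within each folder."""
    inbox: dict[Folder, list[Message]] = {folder: [] for folder in Folder}
    for message in messages:
        inbox[route(message)].append(message)
    return inbox
```

Checking the verified flag first is a deliberate choice in this sketch: a message that looks like a newsletter but comes from an unverified sender still lands in the unverified folder. As noted above, a hijacked verified account would pass this check, so the filter is one layer among several.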

Verified doesn’t equate to visible

Crucially, identity verification doesn’t remove the option of protecting identity. For example, a dating platform may verify the identity of all the subscribers but still allow them to take on any profile identity they choose. The protection comes in knowing that every member on the platform has been verified as a real person and their identity is known to the platform. Any abuse or fraud is then attributable to the individual, allowing the appropriate authorities to take action.
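The separation between what a platform knows and what other users see can be sketched in a few lines. Everything here is hypothetical: the `Account` fields, the function names and, in particular, the policy that the underlying identity is released only on a lawful request are assumptions made for illustration, not a description of how any real platform works.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Account:
    account_id: str
    display_name: str                  # persona chosen freely by the user
    verified_identity: Optional[str]   # hypothetical: known only to the platform


def public_profile(account: Account) -> dict:
    """What other users see: the persona and a verified flag, never the identity."""
    return {
        "display_name": account.display_name,
        "verified": account.verified_identity is not None,
    }


def identity_for_authorities(account: Account, lawful_request: bool) -> Optional[str]:
    """Release the underlying identity only for a lawful request (assumed policy)."""
    if not lawful_request:
        return None
    return account.verified_identity
```

Other members only ever receive the output of `public_profile`, so a verified user can still present any persona they like, while the platform retains the ability to attribute abuse or fraud to a real person.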

Moving to an internet that distinguishes between verified and non-verified individuals would be a huge reset of the status quo. Claims about limitations on freedom of speech would follow, while companies relying on user numbers to demonstrate growth might even need to reset their valuations. However, the concept of verified identities does not silence speech or restrict freedom. What it does is give people the option to filter out the noise and abuse originating from the unverified.

One thing is for certain: the current methods of limiting content by age are not resolving the issue of unwanted, abusive or illegal content. And when it comes to those being prohibited from using social media, the measures are likely to push some of them underground or drive them to circumvent the restrictions, which could increase the risk they face rather than reduce it.