One of the best things about the internet is that it’s an expansive repository of knowledge – and this wealth of knowledge is almost never more than a few clicks away. This unfettered access to information brings along its fair share of challenges, however. In today's information age, we are bombarded with so much information that effectively identifying and filtering out content that is fabricated, manipulated or otherwise false and misleading is an increasingly daunting task.

Indeed, it’s become trite to say that you cannot, and should not, take at face value anything you come across online – from random articles and social media posts all the way to commentaries from self-proclaimed ‘experts’. The waters muddy further once you add deepfake content to the mix, as AI-enabled fake audio, images and video clips can easily turbocharge disinformation campaigns.

Speaking of AI-powered trickery, don’t count on ChatGPT and other tools powered by large language models and trained on massive datasets from the internet to always tell the truth or disprove false narratives. It has been shown that they have an uncanny ability to agree with falsehoods and validate misconceptions, particularly when asked questions loaded with disinformation, and that, ultimately, their power could be harnessed to craft false narratives at a dramatic scale. Another worry is chatbots ‘hallucinating’, i.e. spitting out fabricated answers and references. In other words, their answers need to be scrutinized too – and for good reason.

Poisoning the narrative

You may currently notice a trend toward misrepresenting, disinforming, and bending the truth in creative ways – most often to polarize certain groups of people for political gain, or to draw people’s attention for other negative purposes – with most of it happening online. In essence, information online might no longer flow freely, as it gets filtered and poisoned in order to control a narrative or to create one that works in someone’s favor, whichever works best.

Bad actors have opted to weaponize this technique to control and alter certain pieces of information, distorting facts by inserting fake data points or fake news into online discussions and social media, which can then influence the real world.

For example, some forms of cyberbullying employ disinformation online, with the targets of that bullying experiencing real psychological and physical trauma. With social media, false rumors can spread beyond schools, involving many more people and causing even more pain for the victims. Likewise, racism and bigotry seeping into the general population’s psyche from online discourse is a trend anyone can notice, especially during election season, when disparate groups try to politicize certain topics.

The sources of disinformation

Fake news and information can spread through a variety of means. As the above examples suggest, online discourse is one major source, thanks to forums and social media, where anyone, be it a regular person or a bot, can share anything.

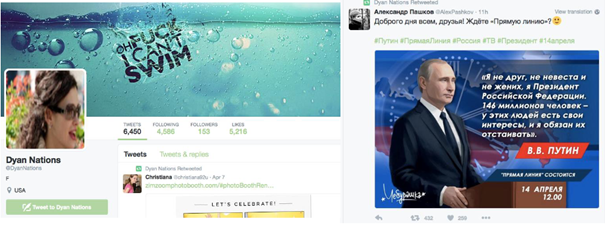

Indeed, bots spreading misinformation have become something of a concern in recent years, with a 2018 study confirming that a majority of Americans, for example, agree that bots spreading misinformation on social media have negative effects. In fact, according to another report, approximately 47% of the internet’s traffic can be attributed to bots, a figure that is increasing year over year, with social media representing an enormous part of it – you are more likely to encounter a bot on Twitter (now X) than a real user, as per a study by Washington University in St. Louis.

Example of an identified bot spreading disinformation.

(Source: Samuel C. Woolley & Douglas Guilbeault. 2017 "Computational Propaganda in the United States of America: Manufacturing Consensus Online." Oxford Internet Institute, page 7.)

Bots do not need convincing to spread fake information, and the more a dubious article or data point gets shared and made visible to actual people, the more likely it is to enter regular offline discourse. During the course of Russia’s aggression toward Ukraine, for example, many fake narratives emerged as troll factories churned out and spread their dictated talking points online, seemingly trying to steer people away from supporting Ukraine and distorting reality by using fake fact-checkers or by spreading out-of-context pictures and footage.

An account spreading disinformation based on an out-of-context quote.

(Source: AFP Fact Check)

When picked up by political representatives, such fake news can have even more devastating effects and some very real consequences, like the January 6 insurrection at the US Capitol, which has been attributed to increased politicization, disinformation, and polarization in American society, driven by online activity and further emboldened by political extremism.

Forms of fakery

Fake information can spread through many forms and places:

- Articles/Reports: Depending on where they get their news, some people prefer subjective truths (more biased media) or even turn to actual fake sites set up by malicious actors to spread false information.

- Social Media: Here, disinformation can spread in the form of articles shared from sources like fake news sites, commenters spreading fake news, or pages and groups created to collect false information and disseminate it among their members, who then share it beyond the group. Also of note are users masquerading as influential members of society, like politicians or scientists, to make their lies more convincing.

- Forums and comment sections: As with social media, it is all about sharing article links, creating threads that tout fake information, or writing posts that do the same. Polarizing comments spread within online communities such as 4chan can be drivers of real-world extremism.

- Videos/Images: Any platform used to share video or image content can be used to spread fake information in the form of false reports, malicious event summaries, propaganda hidden in memes, altered images, and biased documentaries, as well as through online personalities that thrive on societal polarization to push their content.

Another worrying form is the use of doctored images, video, or audio deepfakes, which can be even harder to spot as fakes, as evidenced in our recent article about audio deepfakes and their potential misuse in scams. While created for research purposes, the deepfake in that article highlights the dangers of encountering ‘stolen voices,’ as it is very convincing, and shows how free AI tools like this can exploit people’s likenesses in any form for criminal activity.

How to deal with disinformation or fake news

Thinking through what we see and read online is the best method to counter the influence of fake news. Sadly, critical thinking is often not taught well in schools, but that does not mean it cannot be self-taught at home.

But how exactly do you differentiate between a real story and a fabricated one? Some easy-to-spot clues can help.

- Firstly, stop and think about the information you come across. Blindly believing what a ‘doctor’ says online about the effects of a vaccine or treatment just because they’re wearing a white lab coat in a video is a mistake, as anyone could play a doctor online. Likewise, consider what sort of miracle it would be if a three-eyed baby really had been born this year.

- Secondly, scrutinize and verify everything that you come across. Social media is often used to spread fakery and hoaxes, such as made-up army draft papers, claims that election results were fraudulent, movie scenes repurposed as actual events, assertions that all vaccines cause death, and the like. The best way to counter this is to check objective news websites and to follow fact-checking pages that investigate hoaxes.

- Thirdly, put every piece of data into perspective. Using a variety of legitimate sources like those mentioned in the previous point, read up and form your own opinion. By distilling various viewpoints, a person can form their own positions on critical topics, and with the power of the internet, anyone can get a better understanding of something that they find relevant and interesting.

- Moreover, stay calm and try not to get provoked by a clearly biased opinion. While debates can get heated, online trolls, just like regular bullies, thrive on provocation. Do not legitimize their positions by “taking the bait,” as they say.

And finally, we recommend reading the Cybersecurity and Infrastructure Security Agency’s (CISA) brochure on disinformation tactics, as it is a very useful and informative compilation of disinformation methods, tactics, and ways to spot fakes, with some real examples. With these methods combined, it should be easier to spot what is real and what isn’t.